The goal is to set up a scalable architecture that can later be connected to BizTalk. Essentially, we are setting up a "Business Process Management (BPM)" system. My company recently established a partnership with a company (PNM Soft) that is one of the leaders in "BPM" software. I evaluated their software so that I can recommend it to my clients. It is very powerful and easy to use. It does not require much coding, and even a pure business user can design complex BPM processes with it.

My ongoing work on a scalable framework for my client, using MOSS and other tools, will also help me determine how much value the "BPM" software adds compared with designing a BPM process from scratch using standard MSFT technologies.

Solution:

1) Two InfoPath forms. The first is a browser-enabled form published to SharePoint. The second is an InfoPath task form published to a network location. I tried to get a single form to do both, but I ran into problems: SharePoint won't let me publish the same form both as a workflow form and as a browser-enabled SharePoint form. The second form is what you would call the "Task Item" form. It is one of the four forms a workflow can use (association, initiation, modification, and task).

2) A workflow attached to a form library. Users publish the first form to the form library, which triggers the workflow. This is a SharePoint sequential workflow. A good working demo can be found at:

http://weblog.vb-tech.com/nick/archive/2007/02/25/2207.aspx

Here are some helpful tips:

1) Cloning an InfoPath form to create a second form is not good practice. Every InfoPath form has a unique internal ID, which is copied over to the new form and can cause issues when both forms are used in a workflow setup. I tried using one form as the initiation form and the clone as the task item form but could not get it working: I ran into problems passing parameters from the workflow to the task item form. I am not aware of any workaround, so I think it is good practice to create each form from scratch. For the same reason, I do not recommend the "schema first" approach.

2) Make sure that the network-published InfoPath forms (*.xsn) listed in the MetaData section of "workflow.xml" (part of the workflow solution) exist and are in the correct folder. Delete references to unused forms from "workflow.xml"; otherwise you may get an error like "Form is closed".
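To check these references, it can help to list every form URN in the MetaData section of workflow.xml so that stale entries stand out. This is a minimal sketch, assuming the standard SharePoint element namespace (http://schemas.microsoft.com/sharepoint/) and assuming the form references are the MetaData child elements whose names end in "_FormURN" (for example Task0_FormURN or Instantiation_FormURN). The class name, the FindUnpublished helper, and the idea of comparing against a set of URNs you know you published are illustrative assumptions, not part of SharePoint.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Xml.Linq;

// Hypothetical helper: lists the *_FormURN entries under MetaData in workflow.xml.
public static class WorkflowFormReferences
{
    // Default namespace of SharePoint feature element files such as workflow.xml (assumed).
    static readonly XNamespace Sp = "http://schemas.microsoft.com/sharepoint/";

    // Maps each *_FormURN element name to the URN it contains.
    public static IDictionary<string, string> GetFormUrns(string workflowXml)
    {
        XDocument doc = XDocument.Parse(workflowXml);
        var result = new Dictionary<string, string>();
        foreach (XElement metaData in doc.Descendants(Sp + "MetaData"))
        {
            foreach (XElement entry in metaData.Elements())
            {
                if (entry.Name.LocalName.EndsWith("_FormURN", StringComparison.Ordinal))
                {
                    result[entry.Name.LocalName] = entry.Value.Trim();
                }
            }
        }
        return result;
    }

    // Returns the element names whose URN is not in the set of forms you actually published.
    public static List<string> FindUnpublished(string workflowXml, ISet<string> publishedUrns)
    {
        return GetFormUrns(workflowXml)
            .Where(kv => !publishedUrns.Contains(kv.Value))
            .Select(kv => kv.Key)
            .ToList();
    }
}
```

Any element name returned by FindUnpublished points at a form reference that is a candidate for deletion from workflow.xml.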

3) If you are using VSTA to code InfoPath forms, make sure that the DLL file is also in the folder where the *.xsn forms are located. Otherwise you may get the error "Type: InfoPathLocalizedException, Exception Message: The specified form cannot be found".

Another thing to keep in mind is the use of "FormState" to define global variables. Browser-enabled forms need to preserve state between round trips to the server, and "FormState" lets you do that. The other option is to have the workflow pass the values into the "resource" file that is part of the InfoPath form. This resource file serves as a "secondary" data source: it accepts values from the workflow and then updates the InfoPath task form. I prefer this option, because using the workflow to maintain state ensures that the InfoPath form is updated only after the workflow has executed. This makes sure that the state the InfoPath form shows is actually the state the workflow indicates. There is no need to use the "FormState" property in the latter case.

Example: suppose a user selects a check box on the InfoPath task form to indicate that a particular step is complete and the workflow should move on to the next step. If this state (the check box being selected) is preserved using "FormState", then even if the workflow fails, the check box will still show as selected, and the user will get the impression that the step completed successfully. Having the workflow update the check box through "Extended Properties" instead ensures that the check box shows the selected state only after the workflow has done what it was supposed to do.

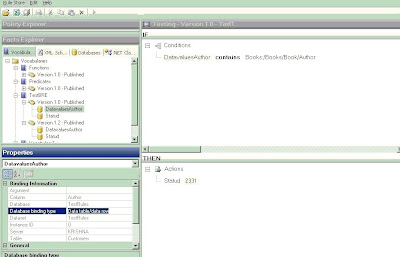

I noticed that the order in which the workflow populates the secondary data source matters. If the order is not correct, the values will not propagate from the workflow through the secondary data source to the InfoPath form. See image 1.

Note: the order of the fields in the secondary data source schema matches the order in which the workflow assigns values.

Use "Trusted Security" when publishing forms with embedded VSTA code.

PS: VSTA and VSTO carry the same weight when used for browser-only forms.
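In many MOSS 2007 task form samples, the "resource" secondary data source described in tip 3 is a file named ItemMetadata.xml that holds a single z:row element; each ows_-prefixed attribute receives the workflow extended property of the same name (set on the workflow side through SPWorkflowTaskProperties.ExtendedProperties). The sketch below builds such a row with the attributes in one explicit order, which is a simple way to keep the schema order and the order in which the workflow assigns values in step. The file name, the field names, and the helper itself are assumptions for illustration; check them against your own form's resource file.

```csharp
using System.Collections.Generic;
using System.Xml.Linq;

// Hypothetical helper producing a row shaped like a typical ItemMetadata.xml:
//   <z:row xmlns:z="#RowsetSchema" ows_Field1="" ows_Field2="" />
public static class TaskFormMetadata
{
    static readonly XNamespace Z = "#RowsetSchema";

    // Adds one ows_<field> attribute per entry in 'fieldOrder', in that exact order.
    // Fields missing from 'values' are written as empty strings.
    public static string BuildRow(IList<string> fieldOrder, IDictionary<string, string> values)
    {
        var row = new XElement(Z + "row", new XAttribute(XNamespace.Xmlns + "z", Z.NamespaceName));
        foreach (string field in fieldOrder)
        {
            string value;
            values.TryGetValue(field, out value);
            row.Add(new XAttribute("ows_" + field, value ?? string.Empty));
        }
        return row.ToString(SaveOptions.DisableFormatting);
    }
}
```

For instance, BuildRow(new[] { "Status", "IsComplete" }, values) always places ows_Status before ows_IsComplete, whatever order the dictionary happens to hold.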

4) The Windows Workflow DLL needs to be installed in the GAC, but the DLL for the VSTA code does not. It does, however, need to be copied to the same network folder where the forms are published.

PS: Suppose you create an InfoPath form with embedded VSTA code and then remove the code before publishing it. You still need to copy the DLL file to the folder containing the .xsn form; otherwise you will get a "Form has been closed" error. I found this out the hard way!

5) Every time an InfoPath form is changed, it should be republished to the network, and the updated DLL should be copied. Then uninstall and reinstall the feature. Workflows that are already in progress will be updated with the new changes, so keep this in mind before applying changes, and wait for "In Progress" workflows to finish.

6) There is no need to re-install the workflow DLL in the GAC if only the InfoPath forms have changed. It is also important to restart IIS after installing a new version of the DLL in the GAC; the restart ensures that the changes take effect.

7) Do not try to change a form's unique ID by going to "File -> Properties" in design view. The ID seems to be referenced in more than one place in the form. This is the same ID that is referenced in "workflow.xml" and tells the workflow which form to open as the task form, initiation form, association form, and modification form.

8) The field behind a "check box" control in an InfoPath form can be changed from the "Boolean" data type to the "Text" data type so that it can accept updates from the workflow. This also gives more flexibility when programming it with VSTA or VSTO.

To do this:

Go to Design View -> Data Source -> double-click the field bound to the check box control. In the properties window that pops up, change the data type from "Boolean" to "Text (string)". This enables the "fx" button; click it and select the value you want to pass from the secondary data source. This secondary data source is configured to receive the XML from the workflow.

Also, double-click the check box in form view and set its properties so that the value when checked is "true" and the value when cleared is "false". Now when you pass the string values "true" or "false" from the workflow, the check box will accept them.
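When the workflow sends the check box value as a string, casing is worth checking: in C#, bool.ToString() returns "True" or "False", which will most likely not match lowercase "true"/"false" check box values. A minimal sketch of a conversion helper (the class and method names are hypothetical):

```csharp
using System;

// Hypothetical helper for a check box bound to a Text field whose
// checked/cleared values are the lowercase strings "true" and "false".
public static class CheckBoxValue
{
    // Avoids bool.ToString(), which would produce "True"/"False".
    public static string ToCheckBoxString(bool isChecked)
    {
        return isChecked ? "true" : "false";
    }

    // Reads a value back from the form, tolerating either casing.
    public static bool FromCheckBoxString(string value)
    {
        return string.Equals(value, "true", StringComparison.OrdinalIgnoreCase);
    }
}
```

On the workflow side, the result would be assigned to the task's extended property for that field, for example ExtendedProperties["IsComplete"] = CheckBoxValue.ToCheckBoxString(false), where "IsComplete" is a hypothetical field name.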

9) An initiation form gives easy access to the XML data stream flowing into the workflow; the stream can be accessed through workflowProperties.InitiationData. I did not want to use an initiation form, because I wanted the workflow to start automatically when a new form is published or an existing one changes in the form library. To do so, read the form's XML from the list item instead, using the following code in the handler for the workflow's "OnWorkflowActivated" activity:

```csharp
using System.IO;
using System.Xml.Serialization;
using Microsoft.SharePoint;

// Inside the OnWorkflowActivated handler. SPSite and SPWeb objects you create
// yourself must be disposed, hence the using blocks.
using (SPSite site = new SPSite(workflowProperties.SiteId))
using (SPWeb web = site.OpenWeb(workflowProperties.WebId))
{
    SPListItem item = web.Lists[workflowProperties.ListId].GetItemById(workflowProperties.ItemId);
    byte[] fileBytes = item.File.OpenBinary();

    // Deserialization. InitForm is the class generated from the schema of the incoming message.
    // Reading from the raw bytes lets the XML reader handle the UTF-8 byte order mark itself,
    // so there is no need to decode the bytes to a string and Trim() them first.
    XmlSerializer serializer = new XmlSerializer(typeof(InitForm));
    using (MemoryStream stream = new MemoryStream(fileBytes))
    {
        InitForm initForm = (InitForm)serializer.Deserialize(stream);
        // ... work with initForm here ...
    }
}
```
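The deserialization step can be tried outside SharePoint. This minimal sketch reads raw file bytes (such as those returned by SPFile.OpenBinary()) through a MemoryStream, letting the XML reader detect the encoding and skip a UTF-8 byte order mark. The InitForm class here is a hypothetical stand-in for the class generated from the form's schema (for example with xsd.exe); its namespace URI and its Title and Approver fields are made up, and a real InfoPath form uses the "my" namespace from its own schema.

```csharp
using System.IO;
using System.Xml.Serialization;

// Hypothetical stand-in for the generated InitForm class; namespace and fields are illustrative.
[XmlRoot("myFields", Namespace = InitForm.Ns)]
public class InitForm
{
    public const string Ns = "http://schemas.microsoft.com/office/infopath/2003/myXSD/example";

    [XmlElement("Title", Namespace = Ns)]
    public string Title { get; set; }

    [XmlElement("Approver", Namespace = Ns)]
    public string Approver { get; set; }
}

public static class FormReader
{
    // Deserializes straight from the file bytes; the reader handles the encoding
    // declaration and any byte order mark, and skips processing instructions.
    public static T Deserialize<T>(byte[] fileBytes)
    {
        var serializer = new XmlSerializer(typeof(T));
        using (var stream = new MemoryStream(fileBytes))
        {
            return (T)serializer.Deserialize(stream);
        }
    }
}
```

Usage would be FormReader.Deserialize<InitForm>(item.File.OpenBinary()), with the generated class in place of the stand-in.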

In the end, I concluded that the BPM software is very efficient for designing pure BPM solutions. MSFT tools are definitely powerful in complex B2B scenarios and for integration with other systems, but they are "overkill" for simple BPM scenarios.

8) Run the svcutil command to generate the client class and config file.


EndpointAddress epAddress = new EndpointAddress("http://localhost:8000/DemoWCF/Service/MathService");


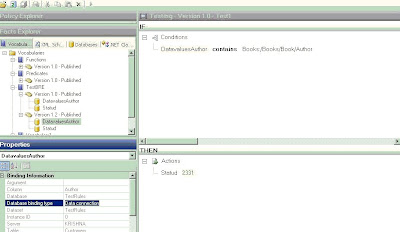

Image 2 (Database binding type as DataConnection)
