WCF (Windows Communication Foundation) is Microsoft's latest product for building distributed applications. The year 2007 has been buzzing with new product releases on a weekly basis, and it is sometimes hard to keep track of them. Speaking for myself, I end up missing a new release now and then. It is tough to keep one eye on client work and the other on Microsoft press releases, but it has become part of the job. Working in consulting is a 24/7 job: when you are not working with a client, you are scanning websites for bits and bytes on the latest product releases and technology trends. I do love my job, but sometimes it gets really tough to keep up with the pace of technology change. Sometimes you have to be like Tiger Woods and choose to leave. If only everyone were as good as him!

Distributed technologies have become the center of a turf war among technology companies, and web services are an important piece of this war. The field is dotted with platforms like Java and open source products like Tungsten.

WCF is a further enhancement of the ASMX web services that ship with .NET Framework 2.0. WCF is part of .NET Framework 3.0, and version 3.5 is also out as a beta. WCF brings the benefits of ASMX, WSE (Web Services Enhancements) and messaging services together in one product. WCF supports the MSMQ, HTTP and TCP transports, whereas ASMX web services support only HTTP, and additional transports can be plugged into WCF. WCF also ships with a GUI configuration editor for setting up performance counters, message logging and tracing. This tool helps reduce operating costs through out-of-the-box monitoring and tracing. Details are at the end.

Demo:

I used Microsoft's base sample, reorganized it, and added my own notes and updates.

1) Create a blank solution called “WCF”.

2) Add a console application project and call it “Host”.

3) Add a C# class file to it and call it “Host.cs”.

· Add a reference to System.ServiceModel.dll.

4) Add another console application project and call it “Client”.

I) Create the WCF Host

5) Define the service contract

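```csharp
// Host.cs: namespaces used by the contract, the service and the host code
using System;
using System.ServiceModel;
using System.ServiceModel.Description;

// Define a service contract.
[ServiceContract(Namespace = "http://Microsoft.Demo.WCF")]
public interface IMath
{
    [OperationContract]
    double Add(double n1, double n2);
}
```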

The “ServiceContract” attribute marks the interface as a WCF service contract, so WCF can publish metadata for it.

The “OperationContract” attribute works like a “public” keyword for the service. Only methods marked with it can be called by clients.

PS: Because the data type used here is simple (double), we have not explicitly defined a “DataContract”. For complex data types it is important to define a DataContract explicitly. It is also good practice to keep the “ServiceContract” and the “DataContract” as separate definitions.
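Here is a minimal sketch of a complex type used in a contract. It assumes a hypothetical ComplexNumber type and IComplexMath contract, neither of which is part of the demo. The data contract is kept separate from the service contract. The helper round-trips a value through DataContractSerializer, which is the serializer WCF uses by default for data contracts.

```csharp
using System.IO;
using System.Runtime.Serialization;
using System.ServiceModel;

// Hypothetical complex type: DataContract/DataMember tell WCF what to serialize.
[DataContract(Namespace = "http://Microsoft.Demo.WCF")]
public class ComplexNumber
{
    [DataMember] public double Real;
    [DataMember] public double Imaginary;

    public ComplexNumber(double real, double imaginary)
    {
        Real = real;
        Imaginary = imaginary;
    }
}

// Hypothetical service contract, defined separately from the data contract.
[ServiceContract(Namespace = "http://Microsoft.Demo.WCF")]
public interface IComplexMath
{
    [OperationContract]
    ComplexNumber AddComplex(ComplexNumber a, ComplexNumber b);
}

public static class DataContractDemo
{
    // Writes the value to XML with DataContractSerializer and reads it back,
    // which is roughly what happens to a parameter crossing the wire.
    public static ComplexNumber RoundTrip(ComplexNumber value)
    {
        DataContractSerializer serializer = new DataContractSerializer(typeof(ComplexNumber));
        using (MemoryStream stream = new MemoryStream())
        {
            serializer.WriteObject(stream, value);
            stream.Position = 0;
            return (ComplexNumber)serializer.ReadObject(stream);
        }
    }
}
```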

6) Implement the service

Implementing the service contract is easy. Write a C# (or VB) class that implements the service interface. This is where the logic behind the service lives.

```csharp
// Implement the service contract.
public class MathService : IMath
{
    public double Add(double n1, double n2)
    {
        double result = n1 + n2;
        // Log to the host's console so each call is visible.
        Console.WriteLine("Return: {0}", result);
        return result;
    }
}
```

7) Create a host for the service

So far the service is just a class in a library. It needs a host to run it, and there are three options:

a. Create a self host

b. Use IIS

c. Use WAS (Windows Process Activation Service)
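For option b, IIS hosting is file based. Here is a minimal sketch, assuming the compiled MathService class is deployed to the web application's bin folder and the file is named Math.svc (a hypothetical name). The .svc file contains only a directive that names the service type. The endpoints are declared in web.config, and the base address is the URL of the .svc file, so no ServiceHost code is needed. WAS (option c) uses the same .svc model and adds activation over non-HTTP transports such as TCP and MSMQ.

```
<%@ ServiceHost Language="C#" Service="MathService" %>
```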

In this demo we will create our own host because it is easy to debug. Before creating the host, we need to define the address where the service will reside.

This step consists of the following five sub-steps:

d. Create a base address for the service.

e. Create a service host for the service.

f. Add a service endpoint.

g. Enable metadata exchange.

h. Open the service host.

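```csharp
// Host.cs (continued). Requires: using System; using System.ServiceModel;
// using System.ServiceModel.Description;
class Program
{
    static void Main()
    {
        // Step d: base address where the service will reside
        Uri baseAddress = new Uri("http://localhost:8000/DemoWCF/Service");

        // Step e: a ServiceHost for the MathService implementation
        ServiceHost serviceHost = new ServiceHost(typeof(MathService), baseAddress);

        try
        {
            // Step f: endpoint = contract + binding + address relative to the base address
            serviceHost.AddServiceEndpoint(
                typeof(IMath),
                new WSHttpBinding(),
                "MathService");

            // Step g: publish metadata over HTTP GET so svcutil can download it
            ServiceMetadataBehavior smb = new ServiceMetadataBehavior();
            smb.HttpGetEnabled = true;
            serviceHost.Description.Behaviors.Add(smb);

            // Step h: start listening and keep the host alive until ENTER is pressed
            serviceHost.Open();
            Console.WriteLine("The service is ready.");
            Console.WriteLine("Press <ENTER> to terminate service.");
            Console.WriteLine();
            Console.ReadLine();

            // Close the host gracefully
            serviceHost.Close();
        }
        catch (CommunicationException ce)
        {
            // Abort tears the host down without waiting for in-progress work
            Console.WriteLine("An exception occurred: {0}", ce.Message);
            serviceHost.Abort();
        }
    }
}
```

Step g is required to run the svcutil command later and download the two client files (config and class). Without it, you may get the following error:

“There was no endpoint listening at http://localhost:8000/DemoWCF/service that could accept the message. This is often caused by an incorrect address or SOAP action. See InnerException, if present, for more details.

The remote server returned an error: (404) Not Found.”

Step g is optional if you decide to create both client files manually.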
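Steps d, f and g can also be expressed in configuration instead of code. Here is a minimal sketch of the host's app.config. It assumes MathService and IMath are declared without a CLR namespace; otherwise, use their full type names. The behavior name MathServiceBehavior is hypothetical. With this file in place, you would drop steps d, f and g from the code and create the host with `new ServiceHost(typeof(MathService))`. WCF then reads the base address, the endpoint and the metadata behavior from the config file.

```xml
<configuration>
  <system.serviceModel>
    <services>
      <service name="MathService" behaviorConfiguration="MathServiceBehavior">
        <host>
          <baseAddresses>
            <add baseAddress="http://localhost:8000/DemoWCF/Service" />
          </baseAddresses>
        </host>
        <!-- Step f: relative address + binding + contract -->
        <endpoint address="MathService" binding="wsHttpBinding" contract="IMath" />
      </service>
    </services>
    <behaviors>
      <serviceBehaviors>
        <!-- Step g: metadata over HTTP GET for svcutil -->
        <behavior name="MathServiceBehavior">
          <serviceMetadata httpGetEnabled="true" />
        </behavior>
      </serviceBehaviors>
    </behaviors>
  </system.serviceModel>
</configuration>
```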

II) Create the WCF Client

8) Run svcutil to generate the client class and config file. The host must be running so that svcutil can download its metadata.

```
C:\Program Files\Microsoft Visual Studio 8\Common7\IDE> svcutil /language:cs /out:generatedClient.cs http://localhost:8000/DemoWCF/Service
```

The two generated files are output.config and generatedClient.cs.

9) Add both files to the “Client” project and rename them to app.config and MathClient.cs. The complete solution will look something like the image below. Note the added references.

10) Add the following code to Client.cs

```csharp
using System;
using System.ServiceModel;

namespace Microsoft.Demo.WCF
{
    class Client
    {
        static void Main()
        {
            // The endpoint address is the host's baseAddress plus the relative
            // address passed to AddServiceEndpoint in the Host class ("MathService").
            EndpointAddress epAddress = new EndpointAddress("http://localhost:8000/DemoWCF/Service/MathService");

            // MathClient is the proxy class generated by svcutil (MathClient.cs).
            MathClient client = new MathClient(new WSHttpBinding(), epAddress);

            try
            {
                // Call the contract implementation in the Host from the Client.
                double value1 = 29;
                double value2 = 16.00;
                double result = client.Add(value1, value2);
                Console.WriteLine("Add({0},{1}) = {2}", value1, value2, result);

                // Close the WCF client
                client.Close();
            }
            catch (CommunicationException ce)
            {
                Console.WriteLine("An exception occurred: {0}", ce.Message);
                client.Abort();
            }

            Console.WriteLine();
            Console.WriteLine("Press <ENTER> to terminate client.");
            Console.ReadLine();
        }
    }
}
```

This is a simple scenario with a single service contract, a single implementation and a single endpoint.
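Step g noted that the svcutil-generated files are optional. Here is a minimal sketch of the manual alternative. It assumes the client project can reference, or copy, the IMath contract from the host. ChannelFactory<T> builds a proxy directly from the contract, so no generated MathClient class is needed. The ManualClient.CallAdd helper is hypothetical.

```csharp
using System;
using System.ServiceModel;

// Same contract as the host's IMath, shared with (or copied into) the client project.
[ServiceContract(Namespace = "http://Microsoft.Demo.WCF")]
public interface IMath
{
    [OperationContract]
    double Add(double n1, double n2);
}

public static class ManualClient
{
    // Hypothetical helper: creates a channel for IMath over the same binding the
    // host uses, makes one call, and closes (or aborts on failure) the channel.
    public static double CallAdd(string address, double a, double b)
    {
        ChannelFactory<IMath> factory =
            new ChannelFactory<IMath>(new WSHttpBinding(), new EndpointAddress(address));
        IMath proxy = factory.CreateChannel();
        bool success = false;
        try
        {
            double result = proxy.Add(a, b);
            ((IClientChannel)proxy).Close();
            factory.Close();
            success = true;
            return result;
        }
        finally
        {
            if (!success)
            {
                // Abort is the safe cleanup after a faulted or timed-out call
                ((IClientChannel)proxy).Abort();
                factory.Abort();
            }
        }
    }
}
```

While the host is running, `ManualClient.CallAdd("http://localhost:8000/DemoWCF/Service/MathService", 29, 16)` would make the same call as the generated client above.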

Scenario:

A new client comes in and wants a new operation called “Subtract”.

There are two ways of doing this:

1) Add the new service “Subtract” in existing interface IMath

2) Create a new Interface IMath1 for “Subtract”

Add the new service “Subtract” in existing interface IMath

This is the simplest update, but it gives no separation between the two operations, because both are part of the same interface. The implementation stays in the same class, with the same service host and endpoint. The only change is on the client side. After regenerating the proxy with svcutil, the client calls “client.Subtract” just like “client.Add” in the demo above.
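Here is a sketch of this first option. It reuses the IMath contract and MathService class from steps 5 and 6, and only the Subtract members are new. The host code from step 7 does not change.

```csharp
using System;
using System.ServiceModel;

[ServiceContract(Namespace = "http://Microsoft.Demo.WCF")]
public interface IMath
{
    [OperationContract]
    double Add(double n1, double n2);

    // New operation added to the existing contract
    [OperationContract]
    double Subtract(double n1, double n2);
}

// Same class, same host, same endpoint; it just implements one more method.
public class MathService : IMath
{
    public double Add(double n1, double n2)
    {
        double result = n1 + n2;
        Console.WriteLine("Return: {0}", result);
        return result;
    }

    public double Subtract(double n1, double n2)
    {
        double result = n1 - n2;
        Console.WriteLine("Return: {0}", result);
        return result;
    }
}
```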

Create a new Interface IMath1 for “Subtract”

Create a new interface, say IMath1, that contains “Subtract”. There are two ways to implement it. The existing MathService class can implement both interfaces, since a C# class may implement any number of interfaces, and the single service host can then expose each contract through its own endpoint. Alternatively, a second class, say “MathService1”, can implement IMath1. Each service host is tied to exactly one implementation class, so this second class needs its own service host. A self-hosting process can open more than one service host (see Key Point 5 below).

Hosting in IIS is another option. Each service gets its own .svc file, and IIS creates and manages the service hosts.
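Here is a sketch of the single-host variant, assuming the IMath1 name used above. MathService implements both contracts, and one ServiceHost exposes them through two endpoints. The TwoContractHost.Create helper and the relative address "MathService1" are hypothetical.

```csharp
using System;
using System.ServiceModel;

[ServiceContract(Namespace = "http://Microsoft.Demo.WCF")]
public interface IMath
{
    [OperationContract]
    double Add(double n1, double n2);
}

// Second contract for the new client
[ServiceContract(Namespace = "http://Microsoft.Demo.WCF")]
public interface IMath1
{
    [OperationContract]
    double Subtract(double n1, double n2);
}

// One class implements both contracts.
public class MathService : IMath, IMath1
{
    public double Add(double n1, double n2) { return n1 + n2; }
    public double Subtract(double n1, double n2) { return n1 - n2; }
}

public static class TwoContractHost
{
    // One ServiceHost, two endpoints: existing clients keep using "MathService",
    // while the new client uses "MathService1" and only sees Subtract.
    public static ServiceHost Create(Uri baseAddress)
    {
        ServiceHost host = new ServiceHost(typeof(MathService), baseAddress);
        host.AddServiceEndpoint(typeof(IMath), new WSHttpBinding(), "MathService");
        host.AddServiceEndpoint(typeof(IMath1), new WSHttpBinding(), "MathService1");
        return host;
    }
}
```

The returned host is opened and closed as in step h.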

Key Points

1) Each WCF service class implements at least one service contract, which defines the operations this service exposes.

2) To expose different sets of operations to different clients, a service class can implement several service contracts and expose each through its own endpoint.

3) Each service host needs at least one endpoint.

4) Each service host is tied to exactly one implementation type (class).

5) To expose multiple services, you can open multiple ServiceHost instances within the same host process.


6) A single service host can have multiple endpoints, but only one service type.

7) You can also create services with different implementation types at run time by creating new ServiceHost instances dynamically.

8) Each endpoint should have a unique relative address.
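Key Points 5 and 7 can be sketched as follows. This is a minimal illustration, not the author's code. The contracts, the MathService1 class and the MultiHost.CreateHosts helper are hypothetical, and each host gets its own base address derived from its class name.

```csharp
using System;
using System.Collections.Generic;
using System.ServiceModel;

// Hypothetical contracts and implementations for two independent services.
[ServiceContract]
public interface IMath { [OperationContract] double Add(double n1, double n2); }

[ServiceContract]
public interface IMath1 { [OperationContract] double Subtract(double n1, double n2); }

public class MathService : IMath
{
    public double Add(double n1, double n2) { return n1 + n2; }
}

public class MathService1 : IMath1
{
    public double Subtract(double n1, double n2) { return n1 - n2; }
}

public static class MultiHost
{
    // Creates one ServiceHost per implementation type (Key Point 4), each under its
    // own base address, e.g. http://localhost:8000/DemoWCF/MathService1.
    // All hosts belong to the calling process; open and close each one yourself.
    public static List<ServiceHost> CreateHosts(string rootUri, Type[] serviceTypes, Type[] contractTypes)
    {
        if (serviceTypes.Length != contractTypes.Length)
            throw new ArgumentException("Each service type needs exactly one contract type.");

        List<ServiceHost> hosts = new List<ServiceHost>();
        for (int i = 0; i < serviceTypes.Length; i++)
        {
            Uri baseAddress = new Uri(rootUri + "/" + serviceTypes[i].Name);
            ServiceHost host = new ServiceHost(serviceTypes[i], baseAddress);
            // Empty relative address: the endpoint listens at the base address itself.
            host.AddServiceEndpoint(contractTypes[i], new WSHttpBinding(), "");
            hosts.Add(host);
        }
        return hosts;
    }
}
```

In Main, you would call Open() on every host in the list before Console.ReadLine(), and Close() on each one afterwards, as in step h.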

WCF Configuration Editor

This tool can be accessed from the Visual Studio interface: go to Tools > WCF Configuration Editor.

Read the help file for details. In summary:

1) Open the config file that was generated by svcutil. This populates the placeholders in the GUI with the relevant data. Click the Endpoints folder to view the existing configuration. This information can be changed from the GUI without touching the XML file.

2) Click the Diagnostics folder and use the toggle switches to browse through the various settings. Check the help file for details.

3) Closing the editor after making changes updates the original app.config that was opened in the first step. If we open the config file, we see additional tags that implement everything we set up in the GUI.

4) Run Host.exe to start the service, then run Client.exe to start the client. All events are logged to the file(s) whose location(s) can be set from the Diagnostics folder in the GUI.
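The tags the editor adds look roughly like the fragment below, which turns on WCF tracing and message logging. This is a sketch, not the exact output: the attributes depend on the options you toggle, and the listener names and the .svclog file names are hypothetical. The resulting .svclog files can be opened in the Service Trace Viewer (SvcTraceViewer.exe).

```xml
<configuration>
  <system.diagnostics>
    <sources>
      <!-- WCF activity and error traces -->
      <source name="System.ServiceModel" switchValue="Warning, ActivityTracing" propagateActivity="true">
        <listeners>
          <add name="traces" type="System.Diagnostics.XmlWriterTraceListener" initializeData="app_tracelog.svclog" />
        </listeners>
      </source>
      <!-- Message logs, enabled by <messageLogging> below -->
      <source name="System.ServiceModel.MessageLogging">
        <listeners>
          <add name="messages" type="System.Diagnostics.XmlWriterTraceListener" initializeData="app_messages.svclog" />
        </listeners>
      </source>
    </sources>
  </system.diagnostics>
  <system.serviceModel>
    <diagnostics>
      <messageLogging logEntireMessage="true" logMessagesAtServiceLevel="true" logMessagesAtTransportLevel="false" />
    </diagnostics>
  </system.serviceModel>
</configuration>
```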

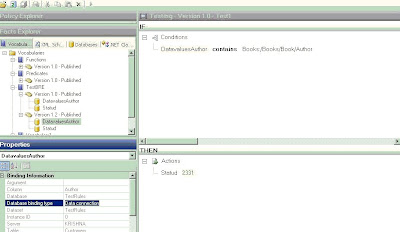

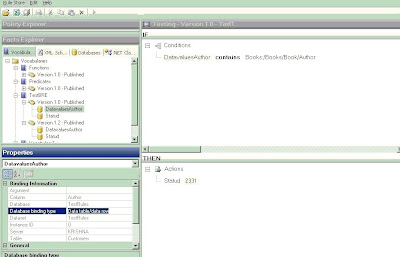

Image 2 (Database Binding type as DataConnection )
